How it works: SENTIMENT

SENTIMENT allows users to get the sentiment (Positive, Negative, Neutral) of any kind of text data using a Sentiment Analysis model powered by large language models.

In this page, we will look at what exactly happens when you call SENTIMENT. 🤔

TL;DR:

SENTIMENTuses proprietary large pre-trained language models (Transformers) that have been fine-tuned on sentiment analysis data to derive the sentiment.

Overview

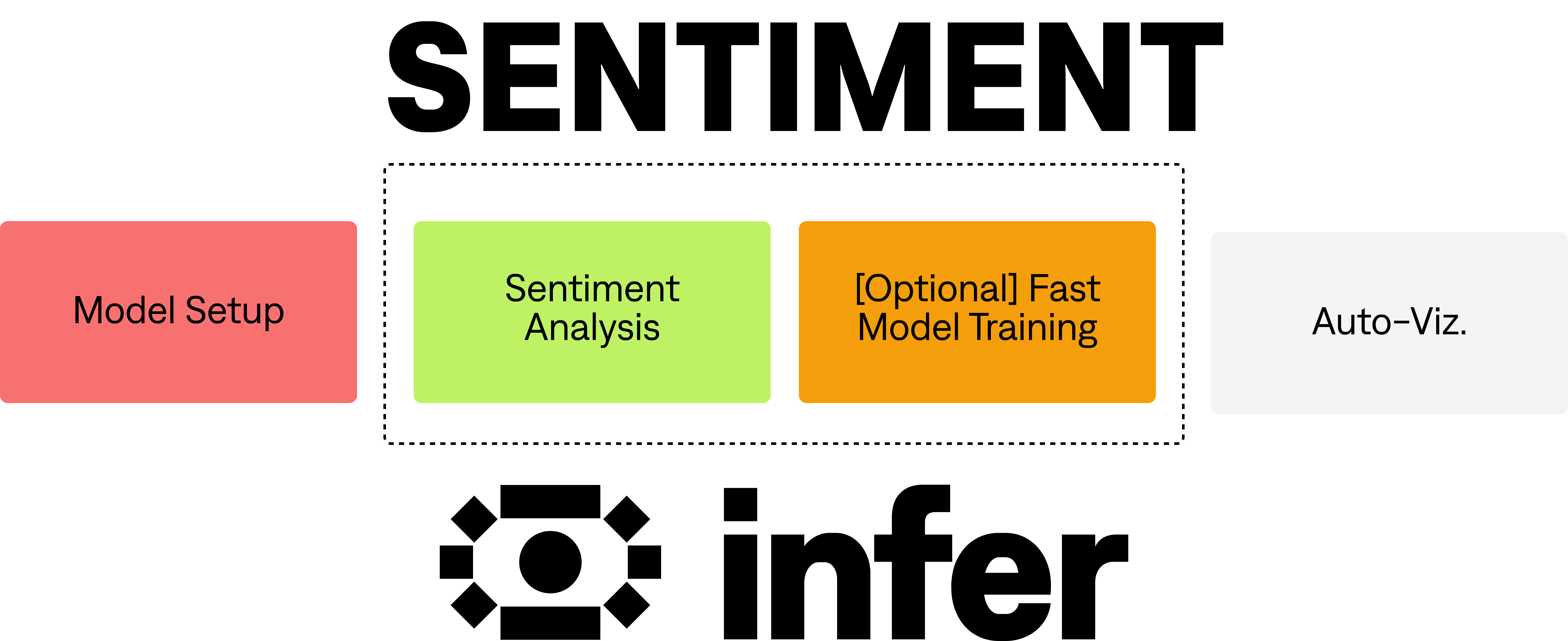

As outlined in the diagram above, there are 4 main processes that occur when calling SENTIMENT.

This does not include the added complexity of scaling infrastructure (setting up and running GPU clusters), the parsing of the statement to deconstruct and orchestrate which commands to run, or the interaction with the data sources or data consumers.

This first process of SENTIMENT begins when the relevant data has been retrieved from the data source.

These 4 processes for SENTIMENT are:

- Model Setup. The configuration of the model is decided, based on the optional input parameters.

- Sentiment Analysis. The sentiment model is used to predict the sentiment of the text.

- [Optional] Fast Model Training. Optionally, for large datasets, we train a model on-the-fly using a sample of predictions from the large sentiment model, to then scale sentiment predictions to millions of data points.

- Auto-viz. Generate relevant insights & visualisations, and return the results to the relevant platform.

In the next sections, we'll outline each process in detail, so you can understand exactly what is happening with confidence.

Setting Up the Sentiment Analysis Model

The first step in the SENTIMENT process is to set up the model. There are two main configuration options available for SENTIMENT:

- The model

versionfor the primary sentiment analysis. - An optional fast approximation method to handle large datasets (using

n_fast_samples). To achieve fast inference times, all text analysis models are mounted on GPUs.

Below, we'll delve deeper into how these configuration choices affect the sentiment analysis process.

Model Versions

The SENTIMENT model offers two main language model versions to choose from: amazon_reviews and twitter. To specify a version, simply use the version parameter when calling the function (e.g. SENTIMENT(txt_column, version='twitter')).

The language models are built using an efficient Transformer architecture that is pretrained on 1 billion sentence pairs (see https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2) and fine-tuned on relevant datasets. Different corpora of text require different fine-tuning approaches, and the available SENTIMENT model versions reflect this.

For instance, the twitter model is trained to work best with tweets, while amazon_reviews performs optimally on product reviews. The amazon_reviews version was trained on ~6 million Amazon US Reviews of Apparel (https://huggingface.co/datasets/amazon_us_reviews), whereas the twitter version was trained on ~45k tweets (https://aclanthology.org/S17-2088/).

We plan to introduce more model versions in the future.

Fast Sentiment Approximation

In certain cases, you may need to analyze a massive dataset of text (e.g. 100k+ data points). If so, the default SENTIMENT model may take hours to process the data. In such scenarios, you can use our fast sentiment approximation to scale sentiment analysis to millions of data points.

To use this feature, simply set n_fast_samples to the desired number of text samples. The function will then randomly select that many samples and use them to train a new text model. The model comprises a TF-IDF vectorizer and XGBoost.

Using the fast approximate model, the function can predict the sentiment of the remaining text in a fraction of the time needed for the default model. Note that the trade-off for this speed boost is accuracy; the more text samples you use, the more accurate the predictions will be, but the slower the processing time will be.

Other than that, the SENTIMENT function works just as it would without using n_fast_samples.

Sentiment Analysis

Sentiment analysis is a type of natural language processing (NLP) that involves using algorithms to identify the emotional tone of a piece of text. The aim is to determine whether the text expresses a positive, negative, or neutral sentiment. Sentiment analysis can be used in various applications, such as social media monitoring, customer feedback analysis, and market research.

We use the models described in the previous section as the language model in our sentiment analysis pipelines.

The whole pipeline for sentiment analysis using a large language model, such as the one deployed by Infer, typically involves the following 5 steps:

- Input text: The first step in the inference pipeline is to pass in the input text that needs to be analyzed for sentiment. The input can be a single sentence, a paragraph, or an entire document.

- Tokenization: The input text is tokenized, which involves breaking it down into individual words or subwords. This is done using a tokenizer, which is a component that is responsible for converting text into numerical input that can be fed into the model. Hugging Face provides various pre-trained tokenizers that can be used for different types of NLP tasks.

- Encoding: Once the input text has been tokenized, it needs to be encoded in a numerical format that can be processed by the model. The tokenized text is typically encoded using a technique called attention-based encoding, which involves assigning a vector representation to each token based on the context of the other tokens in the input.

- Prediction: After encoding the input, the model predicts the sentiment of the text. The output is typically a probability distribution over the possible sentiment classes, such as positive, negative, or neutral. The sentiment class with the highest probability is selected as the model's predicted sentiment for the input text.

- Output: The final step in the pipeline is to output the predicted sentiment. This can be in the form of a label (e.g., "positive," "negative," or "neutral") or a numerical score (e.g., a probability value). The output can be used for various downstream applications, such as sentiment analysis of social media posts, product reviews, or customer feedback.

The final prediction is written to prediction (e.g. Positive), and the probabilities are given for each class via probability_Positive, probability_Negative, and probability_Neutral.

Auto-viz

Automated visualisation for text analysis is under construction!